Tiny motions bring digital doubles to life

Researchers at the Max Planck Institute for Intelligent Systems unveil new technology for motion and shape capture

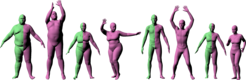

The new technology (MoSh) will help animators jump the “Uncanny Valley” by turning a few moving dots into detailed body shapes that jiggle and deform like real humans. Researchers at the Max Planck Institute for Intelligent Systems in Tübingen presented their Motion and Shape Capture (MoSh) study, which appeared in the journal ACM Transactions on Graphics, at SIGGRAPH Asia in Shenzhen on December 6, 2014. Devised by a team of researchers under the direction of Michael J. Black, Director of the Perceiving Systems department, MoSh is a method that allows animators to record the three-dimensional (3D) motion and shape of a real human and digitally “retarget” it to a new body shape. With MoSh, realistic virtual humans can populate games, the Internet, and virtual reality, while reducing animation costs for the special effects industry.

Current motion capture (mocap) technology uses dozens of high-speed cameras to capture 3D position and motion data from a few reflective markers attached to a person’s body and face. This marker data is then converted into a moving skeleton that controls a digital character, much like a puppeteer controls a puppet. Mocap is widely criticized because this skeleton-driven approach can result in eerily lifeless animations. Consequently, mocap serves only as a starting point for time-consuming and expensive hand animation by experts who put life into the movements of animated characters.

MoSh changes this labor-intensive approach by using a sophisticated mathematical model of human shape and pose, which is used to compute body shape and motion directly from the 3D marker positions. The MoSh approach lets mocap data be transferred to any new virtual shape automatically. For example, the researchers captured the motion of an elegant female salsa dancer and then changed the body shape to that of a giant ogre, making him look light on his feet. “We can take the motions of one body and simply transfer them to another body resulting in a realistic animation,” says Matthew Loper, the lead author of the study.

And, because MoSh does not rely on a skeletal approach to animation, the details of body shape – such as breathing, muscle flexing, fat jiggling – are retained from the mocap marker data. Current methods throw such important details away and rely on manual animation techniques to apply them after the fact.
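The idea of fitting a body model to a handful of markers and then swapping in a different body shape can be illustrated with a deliberately tiny sketch. This is not the MoSh model or its code: it assumes a hypothetical one-parameter linear shape model, and every name and number in it (`template`, `direction`, `beta`) is invented for illustration. It estimates the shape coefficient from marker positions by least squares and then reuses the same model with a different coefficient to produce a new body.

```python
# Toy illustration only: a one-parameter linear "body shape" model.
# All names and numbers are hypothetical and not taken from MoSh.

def predict_markers(template, direction, beta):
    """Marker positions the toy model predicts for shape coefficient beta."""
    return [
        tuple(t + beta * d for t, d in zip(t_pt, d_pt))
        for t_pt, d_pt in zip(template, direction)
    ]

def fit_shape(observed, template, direction):
    """Closed-form least-squares estimate of beta from observed markers."""
    num = 0.0
    den = 0.0
    for o_pt, t_pt, d_pt in zip(observed, template, direction):
        for o, t, d in zip(o_pt, t_pt, d_pt):
            num += d * (o - t)   # projection of the residual onto the shape direction
            den += d * d         # squared length of the shape direction
    return num / den

# Three markers on an average body, and how each moves as beta grows.
template = [(0.0, 1.0, 0.0), (0.2, 1.4, 0.1), (-0.2, 1.4, 0.1)]
direction = [(0.0, 0.1, 0.05), (0.1, 0.0, 0.05), (-0.1, 0.0, 0.05)]

# Simulated capture of a subject whose true coefficient is 0.5.
observed = predict_markers(template, direction, 0.5)

# Recover the subject's shape from the markers alone.
beta_hat = fit_shape(observed, template, direction)

# "Retarget": keep the model, swap in a much larger body shape.
retargeted = predict_markers(template, direction, 2.0)
```

A realistic system would estimate many shape and pose parameters per frame rather than a single scalar, but the basic pattern of fitting model parameters to sparse markers and then changing the shape parameters is the same.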

“Everybody jiggles,” says Black, adding: “We were surprised by how much information is present in so few markers. This means that existing motion capture data may contain a treasure trove of realistic performances that MoSh can bring to life.”

Naureen Mahmood, one of the co-authors of the study, noted: “Realistically rigging and animating a 3D body requires expertise. MoSh will let anyone use motion capture data to achieve results approaching professional animation quality.” This means that realistic digital humans may be coming to video games, training videos, and new virtual-reality headsets.

Opening up realistic human animation to new markets, Max Planck has licensed its technology to Body Labs, a technology company that transforms the human body into a digital platform from which goods and services can be designed, created, and sold. “MoSh has a host of applications,” says William O’Farrell, co-founder and CEO of Body Labs. “The obvious application is enhancing the quality and reducing the cost of animations from mocap; but, we also see extensive uses in apparel. MoSh makes high-end effects accessible to the clothing industry and finally allows clothing designers and customers to easily visualize garments on realistic moving bodies.”