Dendritic constancy - equality in the brain

Researchers find principle of dendritic constancy

Equal rights in the brain? A new paper by researchers at the Ernst Strüngmann Institute shows that the call for equal rights also affects one of the smallest components of the brain: the dendrites. These are the sections of nerve cells that receive and transmit stimuli.

We know that neurons of different shapes perform different computations. But do the different shapes also help to make their functions more similar? Hermann Cuntz, researcher at the Ernst Strüngmann Institute (ESI) for Neuroscience, and colleagues report that neurons with vastly different sizes and shapes of their processes, called dendrites, display surprising equality. The researchers termed this new principle dendritic constancy.

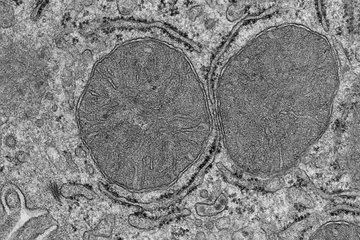

In the 1960s, Wilfrid Rall successfully applied equations from cable theory to neurons. This allowed him to calculate the propagation of currents in dendritic trees, the input structures of neurons. Until then, the contribution of dendrites to neuronal function had been largely ignored. But Rall showed that electrical signals from individual inputs attenuate dramatically as they travel along the dendrites and can interact in sophisticated ways, a turning point for our understanding of neural computation.
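For readers who want to see the attenuation in symbols, the textbook steady-state form of the passive cable equation is sketched here. It is the standard formulation and is not taken from the paper itself. The symbols are assumptions introduced for illustration: $V$ is the voltage relative to rest, $x$ the distance along the dendrite, $d$ its diameter, $R_m$ the specific membrane resistance and $R_i$ the axial resistivity.

$$
\lambda^2 \frac{d^2 V}{dx^2} = V, \qquad \lambda = \sqrt{\frac{R_m}{R_i}\,\frac{d}{4}}
$$

For an idealized, very long dendrite held at voltage $V_0$ at $x = 0$, the solution is

$$
V(x) = V_0 \, e^{-x/\lambda},
$$

so a signal from a single input shrinks to about 37 percent of its value over one length constant $\lambda$.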

Rall’s cable theory is today the basis for all detailed models representing single neurons or entire circuits in the brain. Meanwhile, because of the distinct shapes of their dendrites, a wide variety of electrophysiological behaviors has been attributed to different cell types.

Responses are independent of shape or size

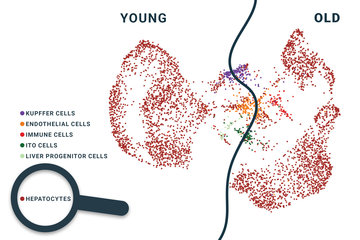

In their recent paper, Cuntz and colleagues explored a feature of cable theory that seems to generalize across diverse dendritic trees: instead of following the impact of single inputs, the authors considered synaptic activity that is distributed across the whole dendritic tree or parts of it. Interestingly, the cable then tends to collapse to a single point, making the neuron’s responses independent of the dendrite’s shape or size.
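The collapse to a single point can be illustrated with a deliberately simplified sketch. It is not the authors' model: it assumes an unbranched passive cable with sealed ends, steady-state conditions, and a synaptic conductance spread evenly per unit membrane area. The function name `steady_state` and all parameter values are hypothetical choices for illustration. Under these assumptions the voltage should settle at `g_syn / (g_leak + g_syn) * E_SYN` everywhere, whatever the length or diameter, while a single input of fixed strength should produce very different responses in a small and a large dendrite.

```python
import numpy as np

# Illustrative values only (assumptions, not taken from the paper)
G_LEAK = 1e-4    # leak conductance per membrane area, S/cm^2
R_AXIAL = 100.0  # axial resistivity, Ohm*cm
E_SYN = 60.0     # synaptic reversal potential relative to rest, mV


def steady_state(length_um, diam_um, syn_density=0.0, point_syn=0.0, n_comp=200):
    """Steady-state voltage (mV) in each compartment of a sealed-end cable.

    syn_density: synaptic conductance per membrane area (S/cm^2),
                 applied to every compartment.
    point_syn:   additional synaptic conductance (S) on compartment 0 only.
    """
    dx = length_um * 1e-4 / n_comp            # compartment length, cm
    d = diam_um * 1e-4                        # diameter, cm
    area = np.pi * d * dx                     # membrane area per compartment, cm^2
    g_ax = (np.pi * d**2 / 4) / (R_AXIAL * dx)  # axial coupling conductance, S

    g_syn = np.full(n_comp, syn_density * area)
    g_syn[0] += point_syn

    # Conductance matrix: axial coupling between neighbours plus
    # leak and synaptic conductance on the diagonal.
    a = np.zeros((n_comp, n_comp))
    i = np.arange(n_comp - 1)
    a[i, i + 1] = -g_ax
    a[i + 1, i] = -g_ax
    a[i, i] += g_ax
    a[i + 1, i + 1] += g_ax
    diag = np.arange(n_comp)
    a[diag, diag] += G_LEAK * area + g_syn

    # Current balance: a @ v = g_syn * E_SYN (leak reverses at rest, 0 mV)
    return np.linalg.solve(a, g_syn * E_SYN)


if __name__ == "__main__":
    small = (100.0, 1.0)    # length, diameter in micrometres
    large = (1000.0, 3.0)

    # Distributed input: same conductance density on both dendrites
    print("distributed, small:", steady_state(*small, syn_density=1e-4).mean())
    print("distributed, large:", steady_state(*large, syn_density=1e-4).mean())

    # Single input of 1 nS at one end: response depends on size
    print("single input, small:", steady_state(*small, point_syn=1e-9)[0])
    print("single input, large:", steady_state(*large, point_syn=1e-9)[0])
```

In this toy setting the constancy is exact because the voltage is uniform and no current flows along the cable. Real dendritic trees are branched and receive uneven input, so the paper's statement that the cable "tends to" collapse is the more careful wording.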

This means that the input-output function of neurons may conveniently remain unchanged during development, when dendrites undergo massive structural changes. Hence the term “dendritic constancy” for this phenomenon. The constancy of dendrites may not only play a role during development but may also be relevant in the context of neurological diseases. Early in the course of such a disease, neurons not yet affected remodel their dendritic trees to maintain information processing in the neuronal network for as long as possible.

Thus, dendritic constancy is a naturally occurring mechanism partially counteracting the damage caused by various brain disorders such as Alzheimer’s disease or epilepsy. As a result, dendritic constancy may help to delay the onset of disease symptoms, such as memory loss.

Contribution to the 3R

This computational work was a collaboration between the Cuntz lab at the ESI, Thomas Deller from the Anatomy department of the Goethe University in Frankfurt, and the group of Peter Jedlicka at the newly established 3R Center of the Justus Liebig University in Giessen. Indeed, in line with the 3R principle of animal welfare (reduce, replace, refine), the work is a prime example of how computational models can serve as excellent tools for exploiting shared experimental datasets, thereby potentially reducing the number of new animal experiments required.

So in a way, neurons are more equal than one may think. Importantly, however, dendritic constancy does not diminish the importance of the large palette of synaptic learning rules and local computations in dendrites that make neurons unique and are sure to keep neuroscientists on their toes for a long time to come. Nevertheless, the authors believe that seeing how dendrites can behave equally across scales will contribute to a better understanding of general principles in neuronal function.

Intriguingly, a recent follow-up study has shown that normalizing synaptic input weights based on the dendritic constancy mechanism improves learning performance in artificial neural networks. Thus, dendritic constancy may be of interest not only for neuroscience but also for the machine learning community.