Colour patch could throw self-driving vehicles off track

Machine learning methods which calculate movements are susceptible to interference

A team of researchers in Tübingen show that optical flow systems based on deep neural networks – a likely component of future autonomous cars – are vulnerable to adversarial attacks. The computer vision experts are shaking up the automotive industry by warning car manufacturers around the globe that it could take a simple colour pattern to put the brakes on computer vision systems in autonomous cars.

A colour patch printed on a t-shirt, or as a bumper sticker, or displayed on a grocery bag could be a problem for autonomous cars; a small pattern that creates so much noise it could become safety critical. “It took us three, maybe four hours to create the pattern – that’s all,” says Anurag Ranjan, a Ph.D. student in the Perceiving Systems department at the Max Planck Institute for Intelligent Systems (MPI-IS) in Tübingen. He is the lead author of the publication “Attacking Optical Flow”, a research collaboration of the Perceiving Systems department and the Autonomous Vision group at the MPI-IS and the University of Tübingen. The paper is accessible on arXiv and due to be presented at the leading international computer vision conference, the International Conference on Computer Vision ICCV, which kicked off on October 27 in Seoul.

The risk of affecting any cars on the road is extremely low but, in the interest of caution, the researchers informed the major car manufacturers that are deploying self-driving technology about the risk several months ago to give them time to react if needed.

In their research work, Anurag Ranjan and his colleagues Joel Janai, Andreas Geiger and Michael J. Black challenge the robustness of several different optical flow systems. Such systems are used in self-driving cars, in robotics, medicine, video gaming, or navigation to name a few. Optical flow describes the motion in a scene captured by the onboard cameras. Recent advances in machine learning have produced faster and better methods for computing this motion, but this new research shows that such methods are vulnerable to “attack”, simply by placing a printed pattern in the scene. Even if the pattern is not moving, it causes the algorithm to think that large parts of the scene are moving in the wrong direction.

Previously, researchers showed that such patches could confuse neural networks trained to classify objects such as stop signs. This new work is the first to show that “regression” problems are also vulnerable to simple attacks. In such problems the computer estimates a continuous value such as depth or speed. The vulnerability of optical flow methods to attack suggests that other problems like stereo are similarly vulnerable. However, when used in safety-critical applications like autonomous cars, such systems need to be “robust” to such attacks.

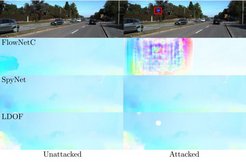

Even a small patch generates a large effect

Sensitive intelligence: The first column shows the optical flow results using an encoder-decoder architecture FlowNetC, a spatial pyramid architecture SpyNet and classical method LDOF. In the second column, a small circular patch is added to both frames at the same location and orientation (highlighted by the red box for illustration purpose). SpyNet and LDOF are barely affected by the patch. In contrast, FlowNetC is strongly affected, even in regions far away from the patch.

Ranjan and his team took on the task in March last year. On their journey, it took them by surprise how small the patch can be to wreak havoc. Even at a size tinier than 1% of the overall image, the system could be attacked, making severe errors in its estimates affecting half the image region. It gets worse the bigger the patch. “This is a concerning threat, as in many cases, the flow system completely erased the movement of objects in the entire scene”, Ranjan says and points to a video showing the attacked system. It is up to everyone's imagination what damage a paralyzed flow system of a self-driving car can cause on a road at high speed.

How each self-driving car operates is a well-kept secret among manufacturers, hence the computer vision research community can only guess. “Optical flow gives information about the world and how objects are moving so it may be a component of existing systems” says co-author Michael J. Black, Director of the Perceiving Systems department. “This work shows the makers of self-driving technology that there is a new possible threat and enable them to train their systems to be robust to such attacks.”

Maybe as important as the attack is that it teaches the researchers how to make better optical flow algorithms using a “zero flow” test. “If we show the system two input images that are identical with no motion between them, the optical flow and the neural network should produce zero flow. But this is often not the case, even without any attack. We can use this to “debug” what the network is doing wrong,” says Ranjan. He and his team hope their research will help raise awareness, that car companies take such attacks seriously, and create their systems in such a way that they are less vulnerable.