Robots learn human perception

Michael J. Black teaches computers to analyse data on their environment as quickly and reliably as the human brain.

Text: Tim Schröder

"I really like Tübingen," says Michael J. Black. "The city, its surroundings. My wife and I love hiking. The Swabian Alb is fantastic!" Michael J. Black intends to stay here permanently. He is now in his late forties. "That means I still have about 20 years to carry out my research." And he is pretty certain about what he wants to achieve in this time. "I want to teach a robot to be as familiar with the world as a two-year-old child." This may not sound like a lot at first, but it would actually be a sensation – after all, two-year-olds are pretty darned clever.

Newborn babies have a strong grasp reflex, as anyone knows whose finger has been grabbed by one – but that is about all they can do. A two-year-old child, however, is already an expert at grasping and commands dozens of different grips. Two-year-olds can gently lift objects and hold a spoon. They can competently turn angular and pointed objects around in their hands, and they are also capable of abstraction. They recognise angular objects as angular and round objects as round, regardless of whether the object has three, four or five corners or curves – and regardless of whether this is the first time they have seen it.

It is this abstraction ability that is still missing from the brain of a computer today. "Human beings analyse their environment within fractions of a second," says Black. "All we need to do is glance at a surface to know whether it is slippery or not." A computer has to carry out extensive calculations before it can disentangle the input from its sensors and identify what something is. The human brain, however, picks a few basic characteristics from the storm of sensory stimuli it receives and comes to an accurate conclusion about the nature of the world around us.

This is precisely what Black envisages for his computers: to be capable of generalisation and abstraction, to be able to infer characteristics from a small amount of information. Yes, a technical system can process thousands of data points, figures and measurements and analyse the atomic structure of a floor tile – yet a robot would probably still slip on a floor that has been freshly mopped. Black's central question is which environmental stimuli are important. How does the brain manage to deduce so much from so little – and safely guide us through our lives? And how do we teach this to computers and robots?

Reduce complexity

Black is one of three founding directors who will head the restructuring of the Max Planck Institute for Metal Research to focus on the area of intelligent systems. Up until a few weeks ago, he held the Chair of Computer Science at Brown University in Providence, in the US state of Rhode Island. There, he worked closely with neurosurgeons. It was clear to him that he had to understand both the computer and the workings of the human brain. He developed statistical computing processes, so-called estimators, which reduce the complexity of environmental stimuli to the extent required, just as the brain does. Thanks to these estimators, the computer does not get lost in the massive volume of data.

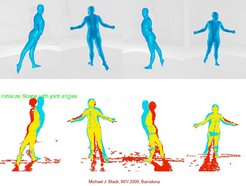

With this procedure, he is gradually approximating the environment – something he calls "approximative visual physics". Black is focusing primarily on vision, and on movement in particular, as movements are especially strong stimuli for the human brain. From the jumble of light reflections, shadows and roaming pixels of a film sequence, his computing processes can now extract moving objects – just not as swiftly or as simply as the brain. For this reason, the brain continues to be his greatest teacher.
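To give a feel for what picking moving objects out of a film sequence involves, here is a deliberately simple sketch of frame differencing: two consecutive greyscale frames are compared pixel by pixel, and only pixels whose brightness changed noticeably are kept. This is not Black's method, which the article does not describe in detail; the function name `moving_pixels`, the threshold of 30 and the tiny example frames are all assumptions made for illustration.

```python
def moving_pixels(prev_frame, next_frame, threshold=30):
    """Return the set of (row, col) positions whose brightness changed
    by more than `threshold` between two greyscale frames.

    Frames are lists of rows of integer brightness values (0-255).
    The threshold is an assumed value chosen to ignore small flicker.
    """
    moved = set()
    for r, (row_prev, row_next) in enumerate(zip(prev_frame, next_frame)):
        for c, (a, b) in enumerate(zip(row_prev, row_next)):
            if abs(b - a) > threshold:
                moved.add((r, c))
    return moved


# Two tiny 3x4 frames: a bright "object" (value 200) moves one pixel
# to the right, while the background flickers slightly (sensor noise).
frame_a = [
    [10, 12, 11, 10],
    [10, 200, 11, 10],
    [10, 12, 11, 10],
]
frame_b = [
    [11, 12, 10, 10],
    [10, 13, 200, 11],
    [10, 11, 11, 10],
]

# Only the pixel the object left and the pixel it entered are reported.
changed = moving_pixels(frame_a, frame_b)
print(sorted(changed))
```

Even this toy version shows the core difficulty: the threshold has to separate real movement from flicker, shadows and noise, which is exactly where a fixed rule breaks down and more robust statistics are needed.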

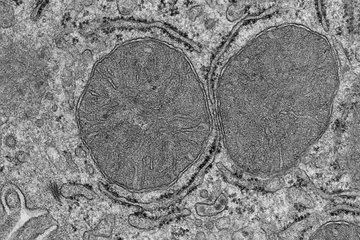

Black's medical colleagues in the US implanted tiny electrodes in the brains of paraplegic patients, in the area responsible for movement – the motor cortex. They then analysed the activity of the nerve cells. Nerve cells send out extremely fine electrical impulses when they are stimulated, and the electrodes detect these signals. At first glance, the recorded activity looks little different from the static on a television screen. Black has succeeded in identifying and interpreting clear activation patterns in this flickering. Thanks to his mathematical procedure, the computer was able to translate the thoughts of the patients into real movements: simply through the power of thought, the patients could move the cursor on a computer monitor. Experts call such links between brain and computer brain-computer interfaces.
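How can nerve cell activity be turned into a cursor movement at all? The article does not spell out Black's mathematical procedure, so the sketch below swaps in a classic textbook idea, often called population-vector decoding, purely for illustration. It assumes that each recorded neuron fires most strongly for one preferred direction of movement, and it adds up those directions weighted by how far each neuron's firing rate rises above its resting level. The neurons, their preferred directions and all firing rates are invented example values.

```python
import math


def decode_direction(preferred_angles, firing_rates, baseline_rates):
    """Estimate an intended movement vector from neural firing rates.

    Each neuron "votes" for its preferred direction (an angle in radians),
    weighted by how far its firing rate lies above or below its baseline.
    Returns the summed vote as an (x, y) vector.
    """
    x = y = 0.0
    for angle, rate, base in zip(preferred_angles, firing_rates, baseline_rates):
        weight = rate - base
        x += weight * math.cos(angle)
        y += weight * math.sin(angle)
    return x, y


# Four hypothetical neurons preferring right, up, left and down.
preferred = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
baseline = [10.0, 10.0, 10.0, 10.0]  # resting firing rates (spikes per second)

# The "right" and "up" neurons fire above baseline; the others stay at rest.
rates = [25.0, 25.0, 10.0, 10.0]

dx, dy = decode_direction(preferred, rates, baseline)
angle_deg = math.degrees(math.atan2(dy, dx))
print(f"move cursor by ({dx:.1f}, {dy:.1f}), direction {angle_deg:.0f} degrees")
```

In this example the decoded movement points diagonally up and to the right. Real recordings are far noisier and involve many more cells, which is why statistical methods are needed to pull a stable signal out of the flickering.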

Black has analysed the activity patterns from the motor cortex and hopes to be able to draw conclusions for the programming of computers. Particularly interesting is that the motor cortex of a human being also becomes active if the person only observes movement, even if the body itself is completely motionless. "Apparently, there is a relationship between a person's knowledge of movement and the person's observation of movement," says Black.

Such findings could be extremely important for future learning strategies for computers. In Tübingen, Black is thus not only working closely with his direct colleagues Bernhard Schölkopf and Joachim Spatz, but also with experts from the neighbouring Max Planck Institute for Biological Cybernetics, such as the working group headed by Heinrich H. Bülthoff, which is researching perception phenomena and the interaction between humans and robots.

A fundamental reason for moving to Tübingen was the opportunity to work on "something really big". "The Max Planck Society allows a scientist to carry out basic research over a period of years in order to understand the basic principles. This is extremely rare in the US," says Black. "Many projects have short terms of about three years, and they then have to deliver clear results. You end up gradually swinging from one small project and one small scientific question to the next – and you simply hope that you will be able to fit everything into the big picture at the end." In Tübingen, on the other hand, the topic of artificial perception and artificial vision can be tackled from the ground up – and over a period of years.

"I would like to understand the neuronal control of movement, understand the models after which the brain is patterned and then transfer these basic principles to the artificial world." So we will know in 20 years whether his machines can actually be as clever as a small child. The challenge is immense, as machines can fail at even the most trivial tasks. Put a spoon, a rubber ball and a cleaning cloth into the hand of a robot one after the other and you certainly won't be bored, as the steel servant will fumble awkwardly with these objects for a while before finally understanding what to do with them. In the time this takes, a two-year-old child will long since have lost interest and moved on to the next toy.