Sensory perception is not superficial brain work

How does the brain decide which sense to focus its attention on when two senses interact? For the first time, scientists have measured sensory signals at different depths of the cortex.

If we cross a road with our eyes on our smartphone, a car horn or engine noise will startle us. In everyday life, we easily combine information from different senses and shift our attention from one sensory input to another, for example from seeing to hearing. But how does the brain decide which of the two senses to focus attention on when they interact? And are these mechanisms reflected in the structure of the brain?

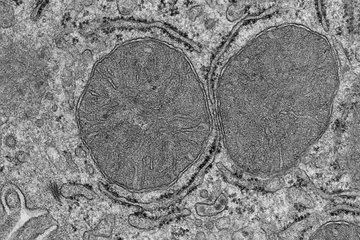

To answer these questions, scientists at the Max Planck Institute for Human Cognitive and Brain Sciences in Leipzig and the Computational Neuroscience and Cognitive Robotics Centre at the University of Birmingham measured how sensory stimuli are processed in the brain. Unlike previous studies, they did not restrict their observations to the surface of the cerebral cortex. For the first time, they also measured sensory signals at different depths within the cortex. The researchers' findings suggest that our brains route the multisensory flow of information through distinct circuits, right down to the smallest windings of this highly folded brain structure.

While the study participants lay in a magnetic resonance imaging (MRI) scanner, the scientists showed them visual symbols on a screen while simultaneously playing sounds. Beforehand, the participants had been asked to focus their attention explicitly on either the auditory or the visual aspect of the stimuli. The neurophysicists Robert Turner, Robert Trampel and Rémi Gau then analyzed exactly where in the cortex the sensory stimuli were being processed. Two challenges had to be overcome. "The cerebral cortex is only two to three millimeters thick, so we needed a very high spatial resolution, of less than one millimeter, during data acquisition," explains Robert Trampel, who co-directed the study at the Max Planck Institute. "Also, because the cerebral cortex is so densely folded, we had to digitally smooth it and divide it into different layers in order to locate the signals precisely. This was all done on a computer, of course."

The results showed that when participants heard a sound, the visual areas of their brains were largely switched off. This happened regardless of whether they focused on the auditory or the visual aspect of the stimuli. When they attended strongly to the auditory input, however, brain activity decreased, particularly in the regions representing the center of the visual field. It therefore seems that sound can strongly draw our attention away from what we are looking at.

In auditory brain regions, the researchers also observed for the first time that the activity pattern across cortical layers changed when participants were presented with sounds alone. The situation was different when participants perceived only "something for the eye": in that case, there was no change. Rémi Gau sums up: "So when we have to process different sensory impressions at the same time, different neuronal circuits become active, depending on what we focus our attention on. We have now been able to make these interactions visible through novel computerized experiments."